Overview

The overarching goal of this workshop is to gather researchers, students, and advocates who work at the intersection of accessibility, computer vision, and autonomous systems. We plan to use the workshop to identify challenges and pursue solutions for the current lack of shared and principled development tools for data-driven, vision-based accessibility systems. For instance, there is a general lack of vision-based benchmarks and methods relevant to accessibility (e.g., people with disabilities and mobility aids are mostly absent from large-scale pedestrian detection datasets). Our workshop will provide a unique opportunity to foster discussion among accessibility, computer vision, and robotics researchers and practitioners.

Invited Speakers

Schedule

| Time (PST) | Event |
| --- | --- |
| 13:00 | Opening Remarks |
| 13:10-13:40 | Rainer Stiefelhagen |
| 13:40-14:10 | Jennifer Mankoff |
| 14:10-14:20 | Challenge Winner Announcements |
| 14:20-14:40 | Challenge Winner Talks |
| 14:40-15:00 | Poster Highlights |
| 15:05-15:45 | Hybrid Poster Session (first 20 minutes) + Coffee Break (last 20 minutes) |
| 15:45-16:15 | Smit Patel |
| 16:15-16:45 | Richard Ladner, "Including Accessibility and Disability in an Undergraduate CV Course" |
| 16:45-17:30 | Concluding Remarks, Poster Session #2 |

Abstracts

Organizers

Challenge Organization

Challenge

As an updated challenge for 2023, we release the following:

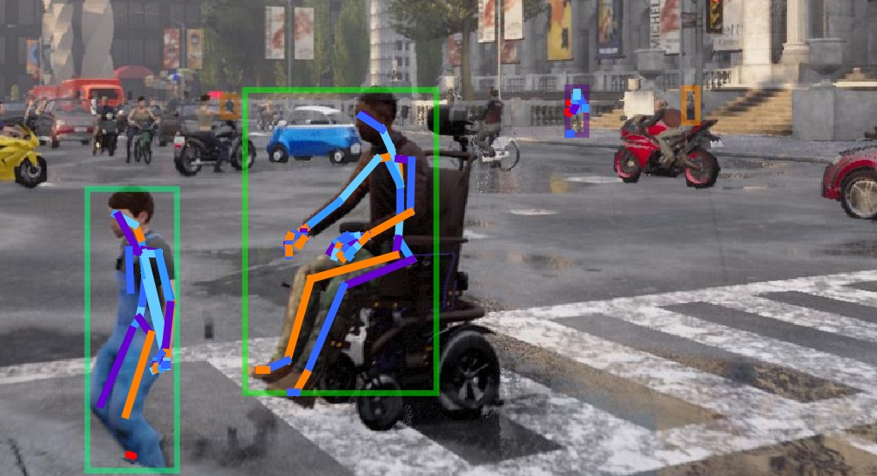

- Training, validation, and testing data, which can be found at this link.

- An evaluation server for instance segmentation and for pose estimation.

The challenge builds on our prior workshop's synthetic instance segmentation benchmark with mobility aids (see Zhang et al., X-World: Accessibility, Vision, and Autonomy Meet, ICCV 2021, bit.ly/2X8sYoX). The benchmark contains challenging accessibility-related person and object categories, such as "cane" and "wheelchair." We aim to use the challenge to uncover research opportunities and spark the interest of computer vision and AI researchers working on more robust visual reasoning models for accessibility.

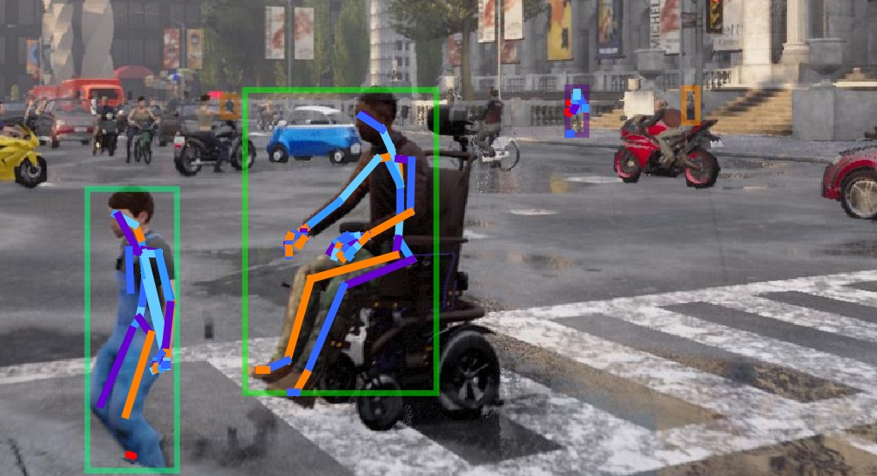

An example from the instance segmentation challenge for perceiving people with mobility aids.

An example from the pose challenge added in 2023.

The team with the top-performing submission will be invited to give a short talk during the workshop and will receive a financial award of $500 and an OAK-D camera. (We thank the National Science Foundation, the US Department of Transportation's Inclusive Design Challenge, and Intel for their support of these awards.)

Call for Papers

We encourage submission of relevant research (including work in progress, novel perspectives, formative studies, benchmarks, and methods) as extended abstracts for the poster session and workshop discussion (up to 4 pages in CVPR format, not including references). The CVPR Overleaf template can be found here. LaTeX/Word templates can be found here. Please send your extended abstracts to mobility@bu.edu. Submissions do not need to be anonymized. Extended abstracts of already published work can also be submitted. Accepted abstracts will be presented at the poster session and will not be included in the printed proceedings of the workshop.

Topics of interest for this workshop include, but are not limited to:

- AI for Accessibility

- Accessibility-Centered Computer Vision Tasks and Datasets

- Data-Driven Accessibility Tools, Metrics and Evaluation Frameworks

- Practical Challenges in Ability-Based Assistive Technologies

- Accessibility in Robotics and Autonomous Vehicles

- Long-Tail and Low-Shot Recognition of Accessibility-Based Tasks

- Accessible Homes, Hospitals, Cities, Infrastructure, Transportation

- Crowdsourcing and Annotation Tools for Vision and Accessibility

- Empirical Real-World Studies in Inclusive System Design

- Assistive Human-Robot Interaction

- Remote Accessibility Systems

- Multi-Modal (Audio, Visual, Inertial, Haptic) Learning and Interaction

- Accessible Mobile and Information Technologies

- Virtual, Augmented, and Mixed Reality for Accessibility

- Novel Designs for Robotic, Wearable and Smartphone-Based Assistance

- Intelligent Assistive Embodied and Navigational Agents

- Socially Assistive Mobile Applications

- Human-in-the-Loop Machine Learning Techniques

- Accessible Tutoring and Education

- Personalization for Diverse Physical, Motor, and Cognitive Abilities

- Embedded Hardware-Optimized Assistive Systems

- Intelligent Robotic Wheelchairs

- Medical, Social, and Cultural Models of Disability

- New Frameworks for Taxonomies and Terminology

Important workshop dates

- Updated challenge release: 3/18/2023

- Workshop abstract submission deadline: 6/11/2023 (11:59 PM PST; please submit extended abstracts via email to mobility@bu.edu)

- Challenge submission deadline: 6/11/2023

- Abstract notification: 6/13/2023

- Challenge winner announcement: 6/18/2023